"Best Practices in Testing & Auditing AI Systems" webinar: The Recap

07/06/2023


A few weeks ago, a combined team of experts from TÜVIT and code4thought hosted a webinar titled “Best Practices for Testing & Auditing AI systems”. During this event, we aimed to address the following topics:

- The challenges and risks of Artificial Intelligence (AI) systems and forthcoming legislation,

- Key characteristics of AI testing,

- Methods to incorporate the latest techniques for detecting and mitigating potential risks and vulnerabilities,

- Ways to integrate AI testing in certification schemes,

- Limitations of AI testing and auditing, and future prospects.

What follows is a recap of the webinar, drawing on the insights shared and the questions asked by the participants.

Starting with the definition of what constitutes an AI system, it is clear that the European Union (EU) is converging on the definition provided by the Organisation for Economic Co-operation and Development (OECD). This suggests that the forthcoming EU AI Act will consider an AI system as a:

“…machine-based system that can, for a given set of human-defined objectives, make:

- predictions,

- recommendations, or

- decisions influencing real or virtual environments.”

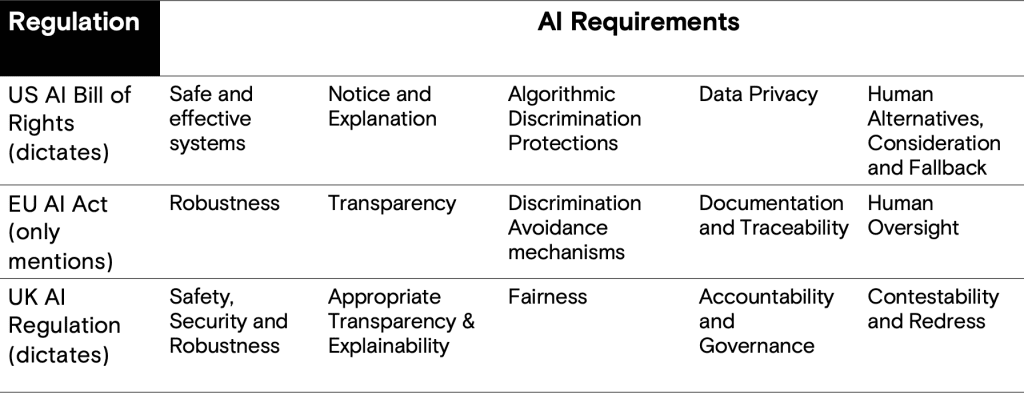

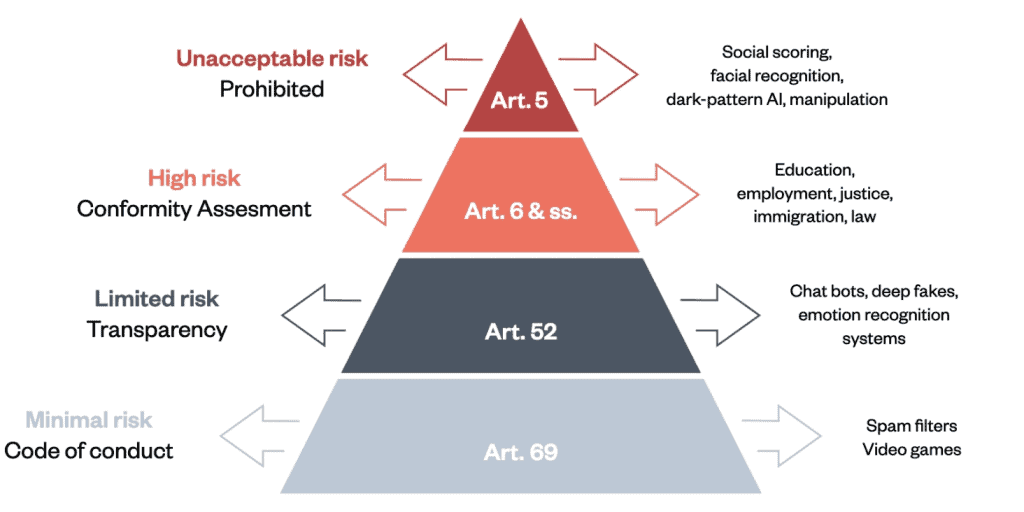

With this in mind, the EU expects that adopting this definition will help ensure legal certainty, harmonisation and wide acceptance of the legislation. Compared to the upcoming initiatives in the US (the Blueprint for an AI Bill of Rights) and the UK (A pro-innovation approach to AI regulation), the EU seems to be taking a risk-based approach instead of a use-case-based one.

Another divergence, as also depicted below, is that both the US and UK proposed regulations explicitly mandate the following principles that an AI system must adhere to:

- Safety & Security,

- Transparency,

- Fairness,

- Privacy & Accountability,

- Redress.

On the other hand, the EU AI Act mentions these principles, but does not enforce them as rigidly defined requirements that all AI systems must abide by. Rather, only high-risk systems are expected to undergo a conformity assessment, part of which involves evaluating the system against the above-mentioned requirements.

These principles seem to be omnipresent (in one form or another) in all forthcoming regulations, which is why a significant portion of the webinar was dedicated to them.

The primary focus of this webinar, however, was to demonstrate that AI requirements and principles such as fairness, transparency and security can be tested and audited using concrete criteria (e.g. the Disparate Impact Ratio for bias testing, as required by New York City's bias audit law for automated employment decision tools, Local Law 144) and by following structured processes characterised by:

- A specific timeline or deadline to ensure efficiency and prevent indefinite prolongation. This helps guarantee timely project completion, allowing for swift implementation of the recommendations.

- Minimal intrusiveness, to prevent disruption to the organisation’s daily operations and to respect its resources and staff.

- A fact-based approach rooted in accepted industry standards. This ensures the audit is rigorous, reliable, credible, and yields actionable recommendations suitable for the organisation’s needs. For instance, standards like ISO 29119-11 and ISO 4358 can be employed for such audits.

- A progression beyond diagnosis. The identified areas for improvement, together with practical recommendations, help the organisation achieve its goals. A thorough audit also includes a follow-up component in which the auditing team monitors improvements and collaborates closely with the implementing team, offering ongoing advice.
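To make the Disparate Impact Ratio mentioned above more concrete, here is a minimal Python sketch. It assumes the definition commonly used for impact ratios in NYC Local Law 144 bias audits: each group's selection rate divided by the selection rate of the most-selected group. The 0.8 cut-off is an assumption borrowed from the EEOC "four-fifths" rule of thumb, not a pass/fail threshold the NYC law itself sets. The function names and the toy data are hypothetical and for illustration only.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return {group: selected / total} from (group, was_selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any selected candidates")
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(ratios, threshold=0.8):
    """List groups whose impact ratio falls below the assumed threshold (hypothetical 0.8 default)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical toy data: 20 of 50 applicants selected in group A, 10 of 40 in group B.
    data = [("A", i < 20) for i in range(50)] + [("B", i < 10) for i in range(40)]
    ratios = impact_ratios(data)
    print(ratios)
    print(flag_adverse_impact(ratios))
```

In this toy example group A's selection rate is 0.40 and group B's is 0.25, so B's impact ratio is 0.25 / 0.40 = 0.625. That falls below the assumed 0.8 threshold, so B would be flagged for closer review. A real audit would also need to handle intersectional categories and small sample sizes, which this sketch deliberately ignores.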

Particularly in the realm of Safety & Security, an audit can be structured in a way that leads to formal certification. More precisely, given a set of existing (and obligatory) regulations and standards, specific requirements can be defined against which the AI system is tested; if it passes, it can gain certification. While formal certification is only granted when the audit is conducted by an accredited evaluation facility, such as TÜVIT, both TÜVIT and code4thought can assist with and pre-audit a system’s implementation against specific formal requirements. This helps ensure a smooth process during the certification phase.
