Navigating Compliance & Risks in AI-Driven Financial Services

In the world of financial services, where innovation and technology are advancing at breakneck speed, the integration of artificial intelligence (AI) has brought about transformative changes. While promising, these changes also introduce many risks and regulatory challenges that demand the vigilant attention of auditors and risk managers.

AI in Financial Services: Use Cases and Risks

In the digital age, banks and other financial institutions must prioritize customer experience to succeed. A contextual, personalized, and tailored experience can become a primary competitive advantage, and AI is a breakthrough technology that can help deliver it.

A survey conducted by The Economist Intelligence Unit revealed that 77% of bankers believe that unlocking the value of AI will be the critical factor in determining the success or failure of banks. Moreover, a McKinsey survey conducted in 2021 found that 56% of banking executives used AI in at least one function of their organizations.

Customer experience also includes a sense of safety: customers want to be confident that their financial and personal data are secure. Hence, auditors and risk managers must understand both the business applications of AI and their associated risks, and know how to balance customer experience with risk mitigation.

The most notable AI use cases include the following:

- Risk Assessment and Management: AI’s ability to analyze vast datasets for credit assessments and real-time risk predictions can be a game-changer. However, it also introduces risks related to model accuracy and data quality, which auditors must scrutinize.

- Customer Service: AI-driven chatbots and virtual assistants can redefine customer experience and enhance customer interactions but pose risks regarding data privacy, ethical use, and potential customer dissatisfaction.

- Algorithmic Trading: AI-driven trading algorithms optimize investment strategies but may carry significant market and operational risks, requiring rigorous auditing and risk assessment.

- Fraud Detection: While AI can excel at fraud detection, it can also produce false positives (legitimate transactions flagged as fraud) and false negatives (fraud that goes undetected), making risk assessment a complex task for auditors.

- Portfolio Management: AI-driven portfolio management tools offer potential gains but also introduce risks related to the transparency and explainability of investment decisions.
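The false positive and false negative trade-off in fraud detection becomes concrete once alerts are compared with confirmed outcomes. The minimal sketch below assumes an auditor has, for a review period, counts of flagged versus confirmed cases; the class name, function name, and numbers are hypothetical and only illustrate the standard confusion-matrix metrics.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Outcome counts from comparing fraud alerts with confirmed cases."""
    true_positives: int   # flagged and confirmed as fraud
    false_positives: int  # flagged, but the transaction was legitimate
    false_negatives: int  # not flagged, but later confirmed as fraud
    true_negatives: int   # not flagged and legitimate


def fraud_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Return precision, recall, and false-positive rate (0.0 when undefined)."""
    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    return {
        # Share of alerts that turned out to be real fraud.
        "precision": ratio(c.true_positives, c.true_positives + c.false_positives),
        # Share of real fraud that the model caught.
        "recall": ratio(c.true_positives, c.true_positives + c.false_negatives),
        # Share of legitimate transactions wrongly flagged.
        "false_positive_rate": ratio(c.false_positives,
                                     c.false_positives + c.true_negatives),
    }


if __name__ == "__main__":
    # Hypothetical review-period counts, for illustration only.
    counts = ConfusionCounts(true_positives=80, false_positives=120,
                             false_negatives=20, true_negatives=9780)
    for name, value in fraud_metrics(counts).items():
        print(f"{name}: {value:.3f}")
```

With these invented counts, the model catches 80% of fraud, yet 60% of its alerts are false alarms. Weighing that trade-off against customer friction and losses from missed fraud is part of what makes the risk assessment complex.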

Challenges of AI Adoption in Financial Services

Despite the inspiring prospects AI opens up for improving the customer experience in banking, integrating it into banking products poses several challenges.

- Data Privacy and Security: The amount of data collected in the banking industry is vast and needs adequate security measures to avoid breaches or violations. Auditors must ensure that AI applications comply with stringent data protection regulations and assess cybersecurity measures.

- Bias and Fairness: Risk managers are responsible for evaluating and minimizing potential biases in AI models to avoid the associated legal and reputational risks. This helps ensure that the models do not discriminate against any particular group or individual, which is essential for building customer trust and maintaining a positive brand image.

- Interoperability: When incorporating AI into existing banking systems, it is crucial to carefully assess interoperability to avoid disruptions to daily operations. This includes evaluating compatibility between the AI technology and existing (legacy) systems so that they can work seamlessly together.

- Regulatory Compliance: Financial institutions must navigate a complex web of regulations, which requires rigorous auditing to verify that AI deployments comply.
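One common first screen for the bias risk described above is to compare outcome rates across groups. The sketch below divides each group's selection (for example, approval) rate by the highest group's rate and flags groups below 0.8, the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures; bias audits under New York City's Local Law 144 report a similarly defined impact ratio. This is a rough screening heuristic, not a legal test of discrimination, and the function names, group labels, counts, and threshold below are illustrative assumptions.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate: selected / total applicants."""
    return {group: selected / total
            for group, (selected, total) in outcomes.items() if total > 0}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    if not rates or max(rates.values()) == 0:
        raise ValueError("need at least one group with a non-zero selection rate")
    best = max(rates.values())
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]],
                        threshold: float = 0.8) -> list[str]:
    """Return groups whose impact ratio falls below the threshold."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items()
                  if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical loan decisions per group: (approved, total applicants).
    decisions = {"group_a": (300, 500), "group_b": (180, 400), "group_c": (110, 200)}
    for group, ratio in impact_ratios(decisions).items():
        print(f"{group}: impact ratio {ratio:.2f}")
    print("Below four-fifths:", flag_adverse_impact(decisions))
```

With these invented counts, group_b's approval rate (45%) is 75% of group_a's (60%), so it would be flagged for closer review of the model and its training data.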

Regulatory compliance is of utmost importance, since existing and upcoming laws and regulations shape how banks may process the data required to train AI-based banking systems and how they must deploy these systems responsibly.

Impact of Regulations on Auditing and Risk Management

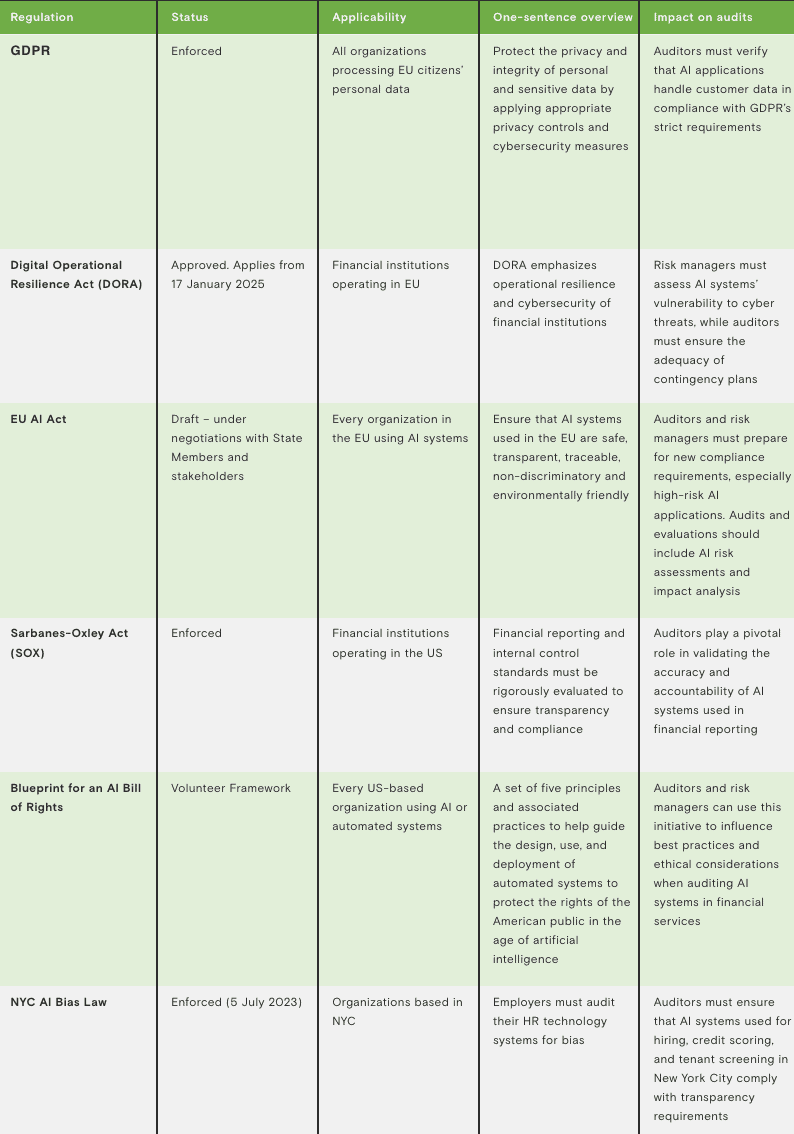

As noted above, banks and financial institutions operate in a highly regulated environment because they are part of a nation's critical infrastructure and a popular target for both individual cybercriminals and state-sponsored attackers. Governments have enacted, or are about to adopt, regulations to protect the financial sector against technology-enabled threats, including cyber-attacks, algorithmic bias and unfairness in AI, and privacy breaches. Auditors and risk managers should be aware of each law's requirements and how they affect the deployment of AI banking solutions.

The following table provides an overview of the most prominent EU and United States regulations and their impact.

| Regulation | Status | Applicability | One-sentence overview | Impact on audits |
|---|---|---|---|---|
| GDPR | Enforced | All organizations processing the personal data of individuals in the EU | Protect the privacy and integrity of personal and sensitive data by applying appropriate privacy controls and cybersecurity measures | Auditors must verify that AI applications handle customer data in compliance with GDPR's strict requirements |
| Digital Operational Resilience Act (DORA) | Approved; applies from 17 January 2025 | Financial institutions operating in the EU | Strengthen the operational resilience and cybersecurity of financial institutions | Risk managers must assess AI systems' vulnerability to cyber threats, while auditors must ensure the adequacy of contingency plans |
| EU AI Act | Draft; under negotiation with Member States and stakeholders | Organizations that develop or use AI systems in the EU, or place them on the EU market | Ensure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory, and environmentally friendly | Auditors and risk managers must prepare for new compliance requirements, especially for high-risk AI applications; audits and evaluations should include AI risk assessments and impact analyses |
| Sarbanes-Oxley Act (SOX) | Enforced | Publicly traded companies in the US, including listed financial institutions | Require rigorous evaluation of financial reporting and internal controls to ensure transparency and compliance | Auditors play a pivotal role in validating the accuracy and accountability of AI systems used in financial reporting |
| Blueprint for an AI Bill of Rights | Voluntary framework | Every US-based organization using AI or automated systems | A set of five principles and associated practices to guide the design, use, and deployment of automated systems and protect the rights of the American public in the age of AI | Auditors and risk managers can use this framework to inform best practices and ethical considerations when auditing AI systems in financial services |
| NYC AI Bias Law | Enforced (5 July 2023) | Employers and employment agencies using automated employment decision tools in NYC | Employers must have their automated hiring tools audited for bias | Auditors must ensure that automated tools used for hiring and promotion decisions in New York City undergo an independent bias audit and meet the law's notice and transparency requirements |
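For the GDPR row, one control auditors can look for when customer data feeds AI training is pseudonymization, which GDPR defines in Article 4(5) and lists among appropriate security measures in Article 32. The minimal sketch below assumes direct identifiers are replaced with a keyed hash (HMAC-SHA256) before data reaches a training pipeline; the function name and record fields are hypothetical. Pseudonymized data still counts as personal data under GDPR, because whoever holds the key can re-identify it.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 token (HMAC).

    The same identifier and key always produce the same token, so
    pseudonymized records can still be joined for model training.
    Without the key, nobody can compute tokens for guessed identifiers;
    whoever holds the key can, so it must be stored separately and
    access-controlled.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Illustration only: a real key would come from a key-management system,
    # kept apart from the pseudonymized dataset.
    key = secrets.token_bytes(32)
    record = {"customer_id": "CUST-000123", "monthly_income": 4200}  # hypothetical record
    training_row = {**record, "customer_id": pseudonymize(record["customer_id"], key)}
    print(training_row)
```

A keyed hash is used rather than a plain hash because identifiers such as account numbers come from small, guessable spaces: an unkeyed SHA-256 of every possible account number can be precomputed, while an HMAC token cannot without the key.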

Auditors and risk managers in the financial services industry are tasked with the critical responsibility of mitigating risks associated with AI adoption while ensuring compliance with an ever-evolving regulatory landscape. As AI reshapes financial services, these professionals play a pivotal role in safeguarding the integrity and security of financial institutions.

Traditional auditing approaches are no longer sufficient in the AI era. Auditors and auditing bodies must acquire new skills and tools to assess AI systems and algorithms and ensure regulatory compliance. These include proficiency in AI ethics and bias assessment, which enables them to critically evaluate the fairness of AI systems. Understanding interpretability and explainability tools is crucial for making sense of complex model predictions and ensuring transparency. Auditors must also adopt AI-specific audit frameworks and testing tools designed to evaluate system performance, security, and regulatory compliance.
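The core idea behind explainability tools can be seen in the simplest case, a linear scoring model, where attribution is exact: each feature's contribution relative to a reference (baseline) applicant is its weight times the difference in values, and the contributions sum to the difference in scores. Tools such as SHAP generalize this kind of additive, per-feature attribution to complex models. The sketch below is illustrative only; the model, feature names, weights, and applicants are hypothetical.

```python
def score(weights: dict[str, float], intercept: float,
          features: dict[str, float]) -> float:
    """Linear credit score: intercept plus weighted sum of features."""
    return intercept + sum(w * features[name] for name, w in weights.items())


def contributions(weights: dict[str, float], baseline: dict[str, float],
                  applicant: dict[str, float]) -> dict[str, float]:
    """Each feature's share of the score gap between applicant and baseline."""
    return {name: w * (applicant[name] - baseline[name])
            for name, w in weights.items()}


def top_reasons(weights: dict[str, float], baseline: dict[str, float],
                applicant: dict[str, float], n: int = 2) -> list[str]:
    """Names of the features that lowered the score most, most negative first."""
    negative = [(name, c) for name, c
                in contributions(weights, baseline, applicant).items() if c < 0]
    negative.sort(key=lambda item: item[1])
    return [name for name, _ in negative[:n]]


if __name__ == "__main__":
    # Hypothetical model weights and applicants, for illustration only.
    weights = {"income_k": 0.5, "debt_ratio": -40.0,
               "late_payments": -15.0, "years_employed": 2.0}
    baseline = {"income_k": 60, "debt_ratio": 0.30,
                "late_payments": 0, "years_employed": 5}
    applicant = {"income_k": 55, "debt_ratio": 0.55,
                 "late_payments": 2, "years_employed": 6}
    for name, c in contributions(weights, baseline, applicant).items():
        print(f"{name}: {c:+.1f}")
    print("Main reasons for a lower score:", top_reasons(weights, baseline, applicant))
```

Here late payments (-30.0) and the debt ratio (-10.0) dominate the lower score. This is the kind of per-decision evidence auditors can request when testing whether a model's outputs can be explained to customers and regulators.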

By staying up to date on emerging AI regulations and committing to continuous learning, auditors and risk managers can help organizations reap the benefits of AI while minimizing its potential pitfalls.