From Guidance to Governance: Leading with OWASP’s AI Maturity Assessment

10/09/2025


AI is surrounded by hype and promises that it will make businesses more productive and efficient. The reality, however, is a bit trickier. A recent report from MIT shows that 95% of GenAI pilots fail because businesses avoid friction. “Smooth demos impress, but without governance, memory, and workflow redesign, they deliver no value. The companies that succeed are those that engineer for friction, calibrating it rather than eliminating it,” explains Jason Snyder for Forbes.

The lesson learned? Organizations need better prioritization, stronger governance, and careful, selective adoption. The question now is, how can they reduce this failure rate?

This is where the OWASP AI Maturity Assessment (AIMA) framework enters the picture. AIMA provides a structured way for organizations to evaluate their AI practices, benchmark maturity, and create a roadmap for improvement. Unlike many existing AI standards or maturity models, AIMA uniquely integrates security, governance, and ethics into a single, community-driven framework.

What Is the AI Maturity Assessment Framework?

At its core, AIMA helps organizations assess the maturity of their AI capabilities not just from a technical perspective but also from governance, trustworthiness, and operational standpoints.

The framework follows the AI lifecycle, from strategy and design through implementation, operations, and governance.

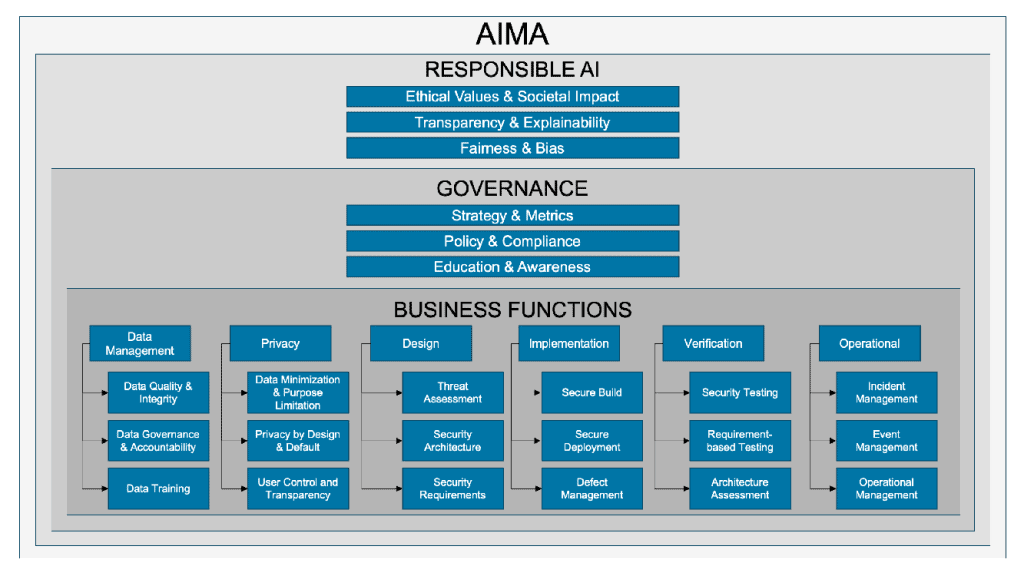

AIMA defines eight assessment domains that span the entire AI system lifecycle:

- Responsible AI Principles: Fairness, transparency, and societal impact.

- Governance: Strategy, policy, and education.

- Data Management: Quality, accountability, and training data practices.

- Privacy: Data minimization, privacy by design, and user control.

- Design: Threat modelling, security architecture, and requirements.

- Implementation: Secure build, deployment, and defect management.

- Verification: Testing and architecture validation.

- Operations: Monitoring, incident response, and system lifecycle management.

Each domain is mapped to maturity levels, ranging from Initial to Optimizing.

Figure 1: AIMA Model. Source: https://owasp.org/www-project-ai-maturity-assessment/

For example, a company at the Initial level in the Operations domain might deploy AI models without systematic monitoring for drift or adversarial attacks. At the Optimizing level, by contrast, the same company would have automated dashboards that track bias, accuracy, and security risks in real time, feeding directly into incident response plans.

This maturity-based approach enables organizations to benchmark where they are today and set realistic goals for the future.
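The Operations example above mentions systematic monitoring for drift. As a rough illustration of what one such check could look like, the sketch below computes a Population Stability Index (PSI) between a baseline sample of model scores and a recent sample. AIMA does not prescribe this metric; the function name, the bin count, and the 0.2 alert threshold are assumptions chosen for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Return the PSI between a baseline sample and a recent sample.

    Bins are equal-width and derived from the baseline range. Values of
    `actual` outside that range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    p = proportions(expected)
    q = proportions(actual)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical data: baseline scores spread over [0, 1), recent scores
# concentrated in the upper half.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]

ALERT_THRESHOLD = 0.2  # assumed value; tune per model and use case
psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, recent)
drift_detected = psi_shifted > ALERT_THRESHOLD
```

An organization at a higher maturity level would run a check like this on a schedule and route alerts into its incident response process rather than inspecting values by hand.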

How AIMA Differs from Other AI Frameworks and Standards

There is no shortage of frameworks guiding AI adoption. ISO/IEC 42001:2023 introduces an AI Management System standard, while NIST provides its AI Risk Management Framework. Gartner and McKinsey, meanwhile, offer AI maturity models to help organizations scale adoption. So why another framework?

Key differentiators of AIMA include:

- Holistic coverage: Unlike ISO or NIST frameworks that focus primarily on risk management or compliance, AIMA integrates security, ethics, and lifecycle governance in one place.

- Maturity-driven roadmap: Instead of prescriptive checklists, AIMA allows organizations to evolve progressively. For instance, a retailer may start with manual bias testing (Defined level) before moving to automated fairness auditing across product recommendation engines (Quantitatively Managed).

- Community and adaptability: True to OWASP’s roots, AIMA is open-source and community-driven. Organizations can adapt it to their specific contexts and even contribute to its evolution.

- Built for practitioners: AIMA provides practical toolkits—such as downloadable Excel self-assessment sheets—making it accessible for organizations without deep in-house AI expertise.

Think of it this way: if ISO is about compliance, and NIST is about risk, then AIMA is about continuous, practical maturity across the AI lifecycle.

The Ease and Challenges of Implementation

On paper, AIMA is designed to be accessible. The toolkit approach means organizations can self-assess maturity levels without requiring external consultants. For example, a mid-sized bank experimenting with AI-powered credit scoring could use the toolkit to evaluate its maturity in areas like explainability, bias detection, and adversarial robustness.

However, implementation challenges do exist:

- Cross-functional demands: AIMA isn’t just for data scientists. It requires input from multiple stakeholders, including compliance officers, cybersecurity teams, operations managers, and other business functions critical to an AI project’s success, such as HR for workforce AI training and hiring.

- Cultural readiness: Achieving higher maturity levels often means shifting organizational culture. A company that traditionally prioritizes speed-to-market may resist embedding rigorous explainability checks in its design process.

- Resource intensity: Moving from Defined to Quantitatively Managed maturity might require investment in monitoring platforms, adversarial attack simulations, or governance committees.

In short, AIMA is easy to start, but it needs commitment and consistency to move to the next levels, which is precisely what makes it valuable.

Scope of Application: Who Benefits from AIMA?

AIMA’s versatility is one of its biggest strengths.

- Cross-industry relevance: Whether it is healthcare providers ensuring AI-driven diagnostics are explainable, logistics firms using AI for route optimization, or insurers adopting AI for claims processing, AIMA applies across industries.

- Different organizational sizes: Although AIMA is best suited to large organizations, smaller businesses can select which of the eight domains to focus on first to establish responsible AI foundations.

- Regulators and auditors: AIMA offers a common language to evaluate AI deployments, bridging the gap between innovation and oversight.

For example, a public sector agency deploying an AI-based citizen services chatbot could use AIMA’s Governance and Responsible AI Principles domains to demonstrate accountability, transparency, and compliance with the EU AI Act.

Five Steps for Organizations Implementing AIMA

So where should an organization begin? Here’s a practical roadmap:

1. Build Awareness and Alignment

Start with executive buy-in, then secure commitment from all other stakeholders. Secure AI is a strategic goal, not a siloed effort of the IT department.

2. Conduct a Baseline Self-Assessment

Evaluate current practices across the eight domains. A fintech might discover that while its Design maturity is strong (bias testing, explainability), its Operations maturity is weak (no incident response for AI model failures).
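A baseline like this can be recorded in a few lines of code. The sketch below is a minimal illustration, not part of the official AIMA toolkit: it assumes a five-step scale in which the "Managed" level is a guess that fills the gap between the levels this article names, and the fintech scores are hypothetical.

```python
from enum import IntEnum


class Maturity(IntEnum):
    INITIAL = 1
    MANAGED = 2  # assumed level name; not mentioned in the article
    DEFINED = 3
    QUANTITATIVELY_MANAGED = 4
    OPTIMIZING = 5


# The eight assessment domains listed earlier in this article.
DOMAINS = (
    "Responsible AI Principles",
    "Governance",
    "Data Management",
    "Privacy",
    "Design",
    "Implementation",
    "Verification",
    "Operations",
)


def summarize(scores: dict[str, Maturity]) -> dict:
    """Summarize a self-assessment; every domain must have a score."""
    missing = [d for d in DOMAINS if d not in scores]
    if missing:
        raise ValueError(f"No score recorded for: {', '.join(missing)}")
    return {
        "weakest": min(DOMAINS, key=lambda d: scores[d]),
        "strongest": max(DOMAINS, key=lambda d: scores[d]),
        "average": sum(scores[d] for d in DOMAINS) / len(DOMAINS),
    }


# Hypothetical fintech: strong Design, weak Operations, Defined elsewhere.
fintech = {d: Maturity.DEFINED for d in DOMAINS}
fintech["Design"] = Maturity.QUANTITATIVELY_MANAGED
fintech["Operations"] = Maturity.INITIAL

summary = summarize(fintech)
```

Keeping the result in a structured form makes it easy to compare later reassessments against the baseline.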

3. Define Priorities and Set Goals

Not every domain needs to advance at the same pace. Identify quick wins and high-impact areas. For instance, a retailer might prioritize improving Governance maturity to prepare for compliance with the EU AI Act.
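One simple way to make this prioritization explicit is to rank domains by the gap between current and target maturity, weighted by business impact. The sketch below assumes maturity is scored from 1 to 5; the `impact` weights, the scoring formula, and the retailer's numbers are all hypothetical and are not defined by AIMA.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainGoal:
    domain: str
    current: int   # current maturity, 1 (lowest) to 5 (highest)
    target: int    # maturity the organization wants to reach
    impact: float  # assumed business-impact weight

    @property
    def gap(self) -> int:
        # A domain already at or above target has no gap to close.
        return max(self.target - self.current, 0)

    @property
    def priority(self) -> float:
        return self.gap * self.impact


def rank_goals(goals: list[DomainGoal]) -> list[DomainGoal]:
    """Return domains with an open gap, highest priority first."""
    open_goals = [g for g in goals if g.gap > 0]
    return sorted(open_goals, key=lambda g: g.priority, reverse=True)


# Hypothetical retailer preparing for the EU AI Act.
goals = [
    DomainGoal("Design", current=4, target=4, impact=1.0),
    DomainGoal("Operations", current=3, target=4, impact=1.0),
    DomainGoal("Governance", current=2, target=4, impact=1.5),
]

ranked = rank_goals(goals)
ranked_domains = [g.domain for g in ranked]
```

In this example Governance ranks first because it combines the largest gap with the highest weight, while Design drops out because it has already reached its target.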

4. Embed Into Existing Systems

Integrate AIMA findings into broader frameworks like ISO/IEC 27001 (information security), DevSecOps pipelines, or enterprise risk management systems. This ensures AI maturity is not siloed.

5. Monitor, Iterate, and Share

Maturity is not static. Organizations should reassess regularly and share lessons learned with the wider community.

AIMA in Practice: An Example Scenario

Consider a European insurance firm adopting AI for fraud detection. Initially, its models are deployed ad hoc, without explainability or monitoring. After applying AIMA:

- At the Strategy level, leadership aligns AI adoption with customer trust as a core business driver.

- Under Design, the firm implements explainability techniques so customers understand why claims are flagged.

- In Implementation, adversarial testing ensures models are robust against manipulation.

- For Operations, the firm sets up dashboards to monitor drift and performance in real time.

- And in Governance, it creates an AI ethics board that reports to the executive committee.

Within 18 months, the firm can progress from “Defined” to “Quantitatively Managed” maturity, turning AI from a black-box risk into a trust enabler.

Final Thoughts

The OWASP AI Maturity Assessment is a movement towards responsible AI adoption. Its integration of security, governance, and ethics sets it apart from traditional frameworks, while its open-source roots ensure it remains adaptable and community-driven.

For organizations wrestling with AI’s risks and opportunities, AIMA offers a way to move beyond compliance and toward continuous maturity and resilience. The path may be challenging, but as OWASP reminds us, security and trust are never destinations—they are ongoing journeys.

If you’re building or deploying AI systems, now is the time to make AI assessment and evaluation a strategic habit. Don’t wait for regulation to dictate action. Use evaluation to sharpen your business decisions, improve product reliability, and strengthen stakeholder trust.

At code4thought, we help organizations design and run meaningful AI evaluations—covering performance, fairness, transparency, and security. Whether you’re preparing for regulatory compliance or striving for excellence in AI quality, our team can support you in turning insight into impact.