In Algorithms

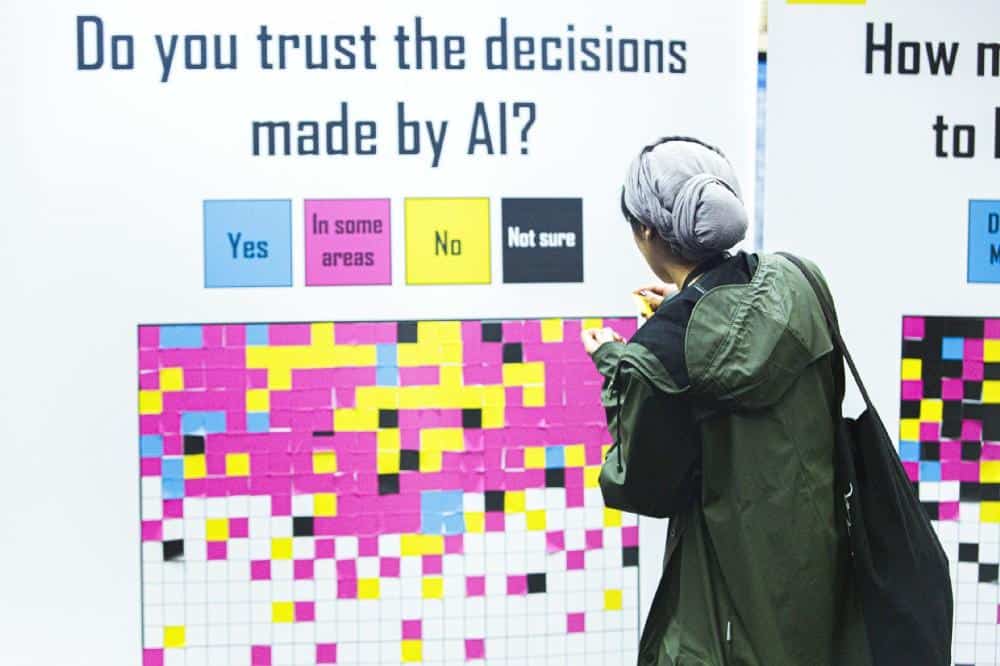

We Need To Trust?

Not There Yet

Artificial Intelligence is changing our lives for good, so we need to take a closer look at it

In his book “21 Lessons for the 21st Century”, historian Yuval Harari notes that “Already today, ‘truth’ is defined by the top results of the Google search.” In a different vein, Professor Nicholas Diakopoulos suggests in his research that authority is becoming increasingly algorithmic. Last week, an article by journalist Karen Hao about Facebook’s AI systems revealed a shocking truth: tech organizations may not be in control of their AI (Artificial Intelligence) systems. Even more worrisome, tech organizations may not want to be in control of their AI if this impedes their growth, their profits, or both. This may not come as a surprise, especially if we consider that the way systems are built reflects the culture of the organizations that own them.

There is a plethora of examples of AI systems going awry or malfunctioning. For instance, Facebook admitted that its algorithms helped spread lies and hate speech that fueled a genocidal anti-Muslim campaign in Myanmar for several years. In 2018, Amazon withdrew its HR recruiting software because it appeared to be biased against women. In late 2019, Apple’s credit card drew complaints of a similar bias, as it seemed to give women smaller credit lines than men. To complete the picture of how big tech organizations control their AI systems, within the last two months Google fired two of its most prominent AI Ethics researchers.

I am fortunate to have found a just cause in my professional life: for more than eighteen years, I have been helping people and organizations control and trust large-scale software systems. This started with traditional, code-driven software systems, where simpler source code makes a system more transparent and less error-prone. Nowadays, trust is becoming even more crucial for AI systems. They depend less on source code and much more on data, so how error-prone they are, and how much impact they can have, depends on how they treat those data. If we consider that data are socially constructed, or, simply put, that data reflect how we humans live, then this essentially means two things:

- AI systems need to be designed so that they are transparent and fair.

- The organizations that own and operate AI systems should be mature enough to govern them responsibly.

All this raises several questions, such as:

- What are the best practices when evaluating how an organization governs an AI system?

- Is there a gold standard for Transparent AI, a single kind of explanation that rules them all?

- We evaluate the fairness of an AI model quantitatively (i.e., through bias testing), but is this enough?

- How can an organization balance building Trustworthy AI against optimizing for efficiency and maximizing its profits?
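To make “quantitative bias testing” a little more concrete, here is a minimal sketch of one widely used metric, the disparate impact ratio: the rate of favorable outcomes for a protected group divided by the rate for a reference group, with values below 0.8 commonly flagged under the so-called four-fifths rule. The data, group labels and function names are hypothetical, and this is only an illustration of the idea, not the way Code4Thought or any particular tool performs bias testing.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of favorable outcomes (True) for each group."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        if decision:
            favorable[group] += 1
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact(decisions, groups, protected, reference):
    """Ratio of the protected group's selection rate to the reference group's."""
    rates = selection_rates(decisions, groups)
    return rates[protected] / rates[reference]


# Hypothetical toy data: True means the credit application was approved.
decisions = [True, False, False, True, True, True, False, True]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]

ratio = disparate_impact(decisions, groups, protected="F", reference="M")
print(f"Disparate impact ratio: {ratio:.2f}")  # prints: Disparate impact ratio: 0.67
# The 0.8 threshold is the commonly cited four-fifths rule of thumb.
print("Flagged by four-fifths rule" if ratio < 0.8 else "Passes four-fifths rule")
```

A number like this says little on its own: it does not tell us why the approval rates differ or whether the difference is justified, which is exactly why it is worth asking whether quantitative testing is enough.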

One may say that these are mostly technical matters, relevant only to engineers and coders. We beg to differ: it is we, the humans, who need to set the requirements for how an AI system should behave. For instance, if we interact with an AI system as citizens or clients, we should be aware of it. If an algorithm decides our credit line, we should receive a comprehensive explanation of that decision, especially if it is a negative one.

At Code4Thought, we build technology that tests AI systems for bias and explains why they came up with a given result. In other words, we strive to give people an instrument for evaluating whether an AI system can be trusted or not.