AI Quality Testing platform

Ensure Quality & Trust in your AI systems effortlessly

iQ4AI is your go-to intelligence platform for comprehensive AI Quality Testing, fully aligned with international standards and regulations.

In-Depth AI Evaluation for Performance and Compliance

Our cutting-edge AI testing platform offers robust, user-friendly tools for in-depth analysis of AI models and data, fully aligned with ISO/IEC TR 29119-11, the guidelines for testing AI-based systems.

iQ4AI provides comprehensive performance assessments and trustworthiness evaluations, including fairness, bias, and transparency analysis, helping you understand your AI system in full. With clear, actionable insights and an intuitive interface, the iQ4AI platform is easy to use and builds a detailed AI risk profile. This helps ensure your AI operations remain trustworthy, efficient, compliant, and aligned with your specific business goals.

Functions

Effortlessly explore key quality aspects of your AI models & algorithms to enhance performance and trustworthiness.

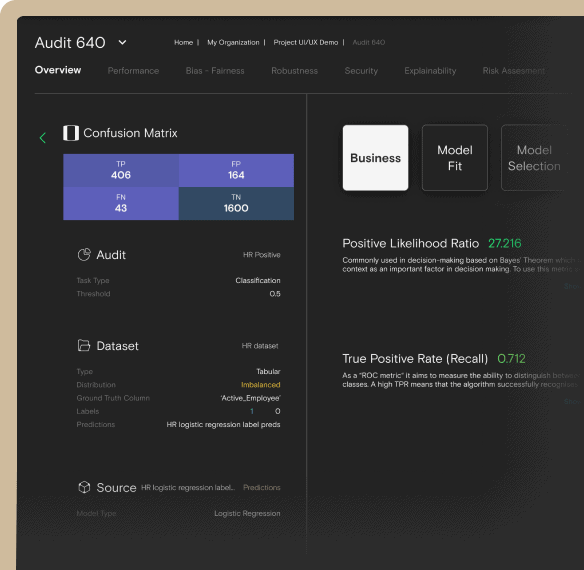

Audit & Monitoring Dashboard

iQ4AI offers a comprehensive, early-stage functional quality monitoring solution independent of complex MLOps standards and processes.

The platform supports the model selection process in production by optimizing models based on business KPIs, maintaining a detailed audit trail for each modeling process, and facilitating multi-disciplinary governance participation.

It acts as a centralized source for tracking model quality, health, performance, drift, and bias across all models in production.

Key Benefits:

- Detect model and data drift over time to ensure integrity.

- Consolidate reports for shared insights on model quality, behavior and performance.

- Tailor insights for compliance, revenue, and cost management.

- Facilitate collaboration across teams with a centralized model view.

- Identify risks in model quality, performance and data integrity.

- Pinpoint root causes of performance or drift issues to refine models.

- Leverage actionable insights to enhance models based on key features.
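iQ4AI's own drift statistics are not described on this page. As an illustration of the kind of check such a dashboard automates, the sketch below computes the Population Stability Index (PSI), one widely used drift measure, between a reference sample (for example, training data) and a current sample of a single numeric feature. The function name, the equal-width binning over the reference range, and the small epsilon for empty bins are our assumptions, not the platform's method.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference and a current sample of one numeric feature.

    Bins are equal-width over the reference range; current values outside
    that range are clipped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference column

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    p_ref = proportions(reference)
    p_cur = proportions(current)
    # PSI = sum over bins of (current - reference) * ln(current / reference)
    return sum((c - r) * math.log(c / r) for r, c in zip(p_ref, p_cur))


reference = [i / 100 for i in range(100)]     # values spread over [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]  # same feature, moved upwards
print(population_stability_index(reference, reference))  # 0.0
print(population_stability_index(reference, shifted))
```

Identical samples score 0.0, and the score grows as the current distribution moves away from the reference. A commonly quoted rule of thumb treats values above roughly 0.25 as a significant shift.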

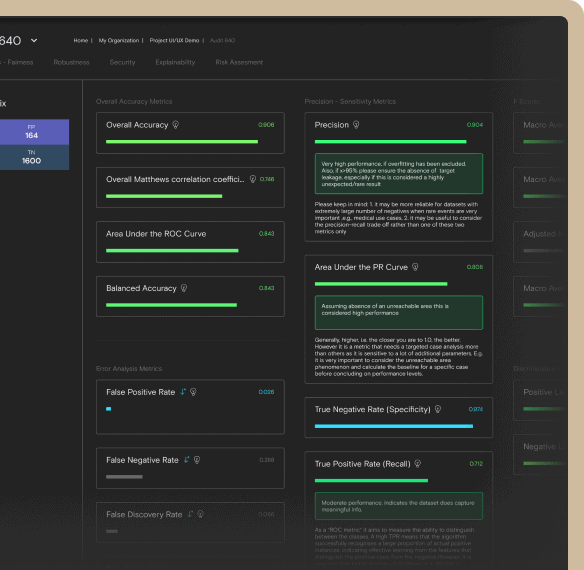

Model Performance Evaluation

Assess how effectively AI models perform their intended tasks, ensuring they are ‘fit-for-purpose’ for the business problems they are designed to solve.

Across multiple stages of the modeling process and a diverse range of inputs, we evaluate performance using metrics aligned with ISO/IEC TS 4213, which covers the assessment of machine learning classification performance.
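To make this kind of metric concrete, here is a minimal sketch of four common confusion-matrix metrics for a binary classifier. It rests on our own assumptions (labels encoded as 0/1, and 0.0 returned when a ratio's denominator is zero); it does not reproduce the full set of metrics defined by the standard or the platform's curated metrics.

```python
from typing import Sequence


def classification_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Two hits, one miss, one false alarm, two correct rejections
print(classification_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
```

In the example, precision, recall, and F1 all equal 2/3, and accuracy is 4 correct out of 6.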

Our platform offers an exploratory data view that highlights potential dataset deficiencies such as data drift, outliers, and hidden bias, helping keep the model robust.

The user is guided through a comprehensive and intuitive analysis using traditional and innovative techniques tailored to the specific business problem, data, and technical requirements. Multiple analysis approaches can be accessed simultaneously, providing flexibility for deeper insights.

Key Benefits:

- Assess models for business relevance at every stage.

- Identify and address data integrity and bias deficiencies.

- Ensure models are compliant and audit-ready.

- Provide stakeholders with in-depth model interpretation and control.

- Mitigate risks from model degradation or bias.

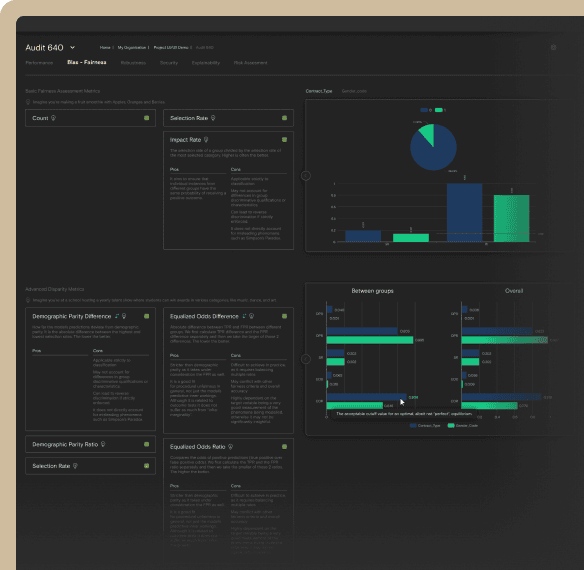

Fairness / Bias Testing

Ensure your AI system treats all user groups equitably, avoiding biased decision-making and maintaining regulatory compliance.

Our platform evaluates fairness using metrics aligned with regulations and standards such as New York City's bias audit law for automated employment decision tools (Local Law 144) and ISO/IEC TR 29119-11. With recurring assessments, users can explore how their model handles sensitive attributes and maintain compliance with diversity and inclusivity requirements over time.

The platform guides users through well-established and innovative intersectional fairness metrics, allowing them to analyze the performance-fairness tradeoff and view fairness across protected groups in an intuitive dashboard.

Metrics like disparate impact, group benefit, equal opportunity, and demographic parity provide insights to detect and mitigate bias while optimizing model performance.
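Three of the metrics named above have standard definitions that can be shown in a few lines. The sketch below computes them for one privileged and one unprivileged group from binary labels and predictions: demographic parity difference (gap in positive-prediction rates), disparate impact (ratio of those rates), and equal opportunity difference (gap in true positive rates). The function names and the privileged/unprivileged framing are our assumptions, group benefit is omitted, and this is not iQ4AI's implementation.

```python
from typing import Hashable, Sequence


def selection_rate(y_pred: Sequence[int], groups: Sequence[Hashable], group: Hashable) -> float:
    """Share of `group` members that receive the positive prediction (1).

    Assumes the group has at least one member.
    """
    preds = [p for p, g in zip(y_pred, groups) if g == group]
    return sum(preds) / len(preds)


def true_positive_rate(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable], group: Hashable
) -> float:
    """Recall within `group`; assumes the group has at least one actual positive."""
    hits = [p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1]
    return sum(hits) / len(hits)


def fairness_report(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    groups: Sequence[Hashable],
    privileged: Hashable,
    unprivileged: Hashable,
) -> dict[str, float]:
    sr_priv = selection_rate(y_pred, groups, privileged)
    sr_unpriv = selection_rate(y_pred, groups, unprivileged)
    return {
        # Gap in positive-prediction rates (0.0 = parity)
        "demographic_parity_difference": sr_unpriv - sr_priv,
        # Ratio of positive-prediction rates (1.0 = parity)
        "disparate_impact": sr_unpriv / sr_priv,
        # Gap in true positive rates (0.0 = parity)
        "equal_opportunity_difference": (
            true_positive_rate(y_true, y_pred, groups, unprivileged)
            - true_positive_rate(y_true, y_pred, groups, privileged)
        ),
    }


# Toy example: group "a" is treated as privileged, "b" as unprivileged.
report = fairness_report(
    y_true=[1, 0, 1, 1, 0, 1, 0, 1],
    y_pred=[1, 0, 1, 1, 0, 0, 0, 1],
    groups=["a", "a", "a", "a", "b", "b", "b", "b"],
    privileged="a",
    unprivileged="b",
)
print(report)
```

Here group "b" receives positive predictions 25% of the time against 75% for group "a", so disparate impact is about 0.33 and both differences are -0.5. A disparate impact below 0.8 is commonly flagged under the US "four-fifths rule" for employment selection.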

Key Benefits:

- Ensure compliance with diversity and inclusivity regulations.

- Monitor fairness for all protected groups throughout the ML lifecycle.

- Balance performance and fairness with interactive, intuitive metrics.

- Detect intersectional unfairness and algorithmic biases.

- Boost confidence in model outcomes with comprehensive fairness checks.

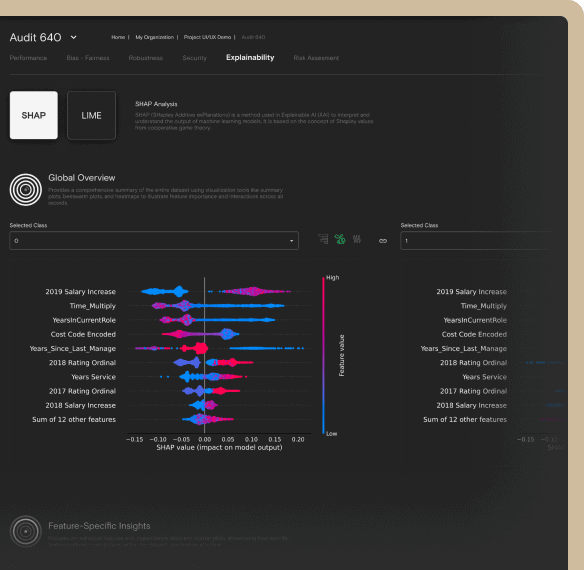

Transparency / Explainability Analysis

Ensure a clear understanding of how AI systems make decisions by providing human-readable explanations of individual and global predictions.

Using a mix of well-established and proprietary methods, including LIME, Shapley values, our proprietary MASHAP, and visual tools such as partial dependence plots, the analysis highlights which features are the strongest predictors and how combinations of features drive model behavior.

This approach combines model-agnostic and model-specific techniques, offering nuanced insights that help stakeholders and regulators understand and validate AI model outcomes.

These insights support business decision-making, help anticipate how well the model will generalize, and reveal potential hidden biases.
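Shapley values, one of the established methods listed above, attribute a single prediction to the input features by averaging each feature's marginal contribution over all coalitions of the other features. The sketch below computes them exactly for one prediction, assuming a "baseline" convention in which features outside a coalition take fixed reference values. Its cost grows exponentially with the number of features, which is why practical tools rely on approximations; it says nothing about how MASHAP works, and the function name is our own.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def shapley_values(model: Model, x: Sequence[float], baseline: Sequence[float]) -> list[float]:
    """Exact Shapley values for one prediction, using a single baseline.

    A coalition S is evaluated by feeding the model x's values for features
    in S and baseline values for all other features. The number of model
    calls grows as 2^n, so this is only practical for a handful of features.
    """
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Toy linear model: 2*z0 + 3*z1 - z2
print(shapley_values(lambda z: 2 * z[0] + 3 * z[1] - z[2], [1, 2, 3], [0, 0, 0]))
```

For a linear model with a zero baseline, each value reduces to the feature's weight times its value (here 2, 6, and -3, up to floating-point rounding), and the values always sum to f(x) - f(baseline).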

Key Benefits:

- Gain transparent, human-readable explanations of AI decisions.

- Understand model behavior at both global and local levels.

- Visualize key features driving predictions with graphical explanations.

- Ensure regulatory compliance and build stakeholder confidence.

- Anticipate model generalization and uncover hidden biases.

Features

Designed around your workflow, so you can stay on track without getting overwhelmed.

General Features

Secure Access

Role-based access control (RBAC) for secure permissions management.

Containerized

Deployed in Docker containers for flexibility.

On-Prem Support

Fully supports on-premise deployments.

Audit comparability

Track and compare key decisions and insights over time.

Curated metrics

Tailored metrics with definitions and diagnostic messages for business relevance.

Reporting

Generate detailed reports comparing audits across model evolutions.

Performance Features

Performance Monitoring

Track model accuracy & efficiency with pre-built metrics.

Data Drift Detection

Compare and investigate data distributions to monitor data drift.

Class Imbalance Detection

Identify low-frequency prediction changes.

Feature Quality Checks

Detect integrity issues like missing values and range violations.
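Feature quality checks of this kind can be illustrated with a small sketch that scans tabular records for missing values (None or NaN) and for values outside user-supplied valid ranges. The record format (one dictionary per row), the function name, and per-feature range configuration are our assumptions.

```python
import math
from typing import Mapping, Optional, Sequence

Record = Mapping[str, Optional[float]]


def feature_quality_issues(
    rows: Sequence[Record],
    valid_ranges: Mapping[str, tuple[float, float]],
) -> list[str]:
    """Return one message per missing or out-of-range value."""
    issues = []
    for row_idx, row in enumerate(rows):
        for feature, (low, high) in valid_ranges.items():
            value = row.get(feature)
            # Treat both an absent/None value and a float NaN as missing
            if value is None or (isinstance(value, float) and math.isnan(value)):
                issues.append(f"row {row_idx}: '{feature}' is missing")
            elif not low <= value <= high:
                issues.append(f"row {row_idx}: '{feature}'={value} outside [{low}, {high}]")
    return issues


rows = [
    {"age": 34, "income": 52000.0},
    {"age": None, "income": 48000.0},
    {"age": 212, "income": float("nan")},
]
for issue in feature_quality_issues(rows, {"age": (0, 120), "income": (0.0, 1e7)}):
    print(issue)
```

The example reports the missing age in row 1, plus the out-of-range age and the NaN income in row 2.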

Fairness Features

Algorithmic Bias Detection

Visualize and measure algorithmic bias.

Intersectional Bias Detection

Analyze bias across multiple dimensions (e.g., gender, race).

Dataset Fairness

Ensure fairness in datasets before training by balancing feature dependencies.

Fairness Metrics

Use built-in fairness metrics like demographic parity and equal opportunity.

Model Fairness

Compare fairness across model outcomes for different subgroups.
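Intersectional analysis matters because a model can look balanced on each attribute separately while treating a combined subgroup differently. The sketch below, with hypothetical function names and a toy dataset, computes positive-prediction rates for every observed combination of the chosen attributes and reports the lowest rate divided by the highest.

```python
from collections import defaultdict
from typing import Hashable, Mapping, Sequence


def intersectional_selection_rates(
    y_pred: Sequence[int],
    attributes: Sequence[Mapping[str, Hashable]],
    keys: Sequence[str],
) -> dict[tuple, float]:
    """Positive-prediction rate for every observed combination of `keys`."""
    positives: dict[tuple, int] = defaultdict(int)
    totals: dict[tuple, int] = defaultdict(int)
    for pred, attrs in zip(y_pred, attributes):
        subgroup = tuple(attrs[k] for k in keys)
        totals[subgroup] += 1
        positives[subgroup] += pred
    return {g: positives[g] / totals[g] for g in totals}


def worst_case_ratio(rates: Mapping[tuple, float]) -> float:
    """Lowest subgroup rate divided by the highest (1.0 means parity).

    Assumes at least one subgroup has a non-zero rate.
    """
    return min(rates.values()) / max(rates.values())


y_pred = [1, 1, 0, 1, 1, 0, 1, 1]
attributes = [
    {"gender": "F", "age": "<40"}, {"gender": "F", "age": "<40"},
    {"gender": "F", "age": "40+"}, {"gender": "F", "age": "40+"},
    {"gender": "M", "age": "<40"}, {"gender": "M", "age": "<40"},
    {"gender": "M", "age": "40+"}, {"gender": "M", "age": "40+"},
]
for keys in (["gender"], ["age"], ["gender", "age"]):
    rates = intersectional_selection_rates(y_pred, attributes, keys)
    print(keys, rates, worst_case_ratio(rates))
```

In this toy data every gender and every age band receives positive predictions 75% of the time, so single-attribute checks show parity; the combined subgroups range from 50% to 100%, giving a worst-case ratio of 0.5.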

Explainability Features

SHAP, MASHAP & LIME

Enhance model transparency.

‘What-if’ analysis

Explore model outcomes by changing values and observing impacts.

Global and local explanations

Understand how features impact predictions on a macro and micro level.

Surrogate models

Automatically generate interpretable models before production.
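A "what-if" analysis re-scores an instance after changing one of its inputs and reports how the prediction moves. The minimal sketch below assumes the model is any callable that maps a feature dictionary to a score; the function name, the toy model, and the feature names are hypothetical.

```python
from typing import Callable, Mapping, Sequence

Model = Callable[[Mapping[str, float]], float]


def what_if(
    model: Model, instance: Mapping[str, float], feature: str, values: Sequence[float]
) -> list[tuple[float, float]]:
    """Re-score `instance` with `feature` set to each candidate value.

    Returns (value, change in prediction relative to the original) pairs.
    """
    original = model(instance)
    results = []
    for v in values:
        modified = {**instance, feature: v}  # copy; the input is left untouched
        results.append((v, model(modified) - original))
    return results


# Toy scoring model: income raises the score, past defaults lower it
score = lambda r: 2 * r["income"] - 5 * r["defaults"]
print(what_if(score, {"income": 10, "defaults": 1}, "defaults", [0, 1, 2]))
```

Setting `defaults` to 0, 1, or 2 changes this toy score by +5, 0, and -5 respectively.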

Analytics Features

Dashboards and charts share insights and make models understandable, while models are validated for performance before they are deployed to production.

Who It's For

Foster cross-team collaboration with a platform that provides clear, actionable insights for data scientists, compliance officers and business leaders.

Data Scientists and AI Engineers:

Innovators building the future of AI. Use our tool to ensure your models perform at their peak, free from bias, and secure by design.

Governance, Risk, and Compliance Teams:

Champions of ethics and regulation. Easily monitor and enforce AI compliance, ensuring every model meets the relevant regulations and standards, such as the EU AI Act, GDPR, and ISO standards.

CIOs and CTOs:

Visionaries leading technology strategy. Leverage insightful, high-level reporting to make informed, strategic decisions on AI deployment that drive business success.

Product Managers & AI Operators:

Trailblazers optimizing AI within products. Identify and resolve performance issues swiftly, keeping your AI-powered solutions running at full potential.

Technology Consultants:

Experts shaping AI in regulated industries. Use our platform to deliver superior AI solutions, enhancing model quality and compliance for your clients.

AI Model Auditors and QA Professionals:

Guardians of quality and fairness. Harness powerful, standardized testing to ensure AI models meet ethical and performance benchmarks over time.

Why iQ4AI

code4thought makes AI technology trusted and thoughtful, building a future where AI works smarter, ethically, and transparently.

We’re driven by international AI quality testing standards and a commitment to excellence, ensuring our platform supports teams of all expertise levels with an intuitive interface that enhances usability.

Designed for seamless collaboration across multidisciplinary teams, our tool is easily upgradeable, ensuring you are always at the forefront of AI innovation without disruption.

Smooth integration with CI/CD pipelines provides a crucial feedback loop that helps ensure trustworthy and reliable AI before deployment.
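The integration details are not specified on this page, so the following is only a hypothetical sketch of how a pipeline step could act on evaluation results: compare metrics against thresholds and return a non-zero exit code when any check fails, which CI systems generally treat as a failed step. The metric names, threshold values, and function names are illustrative assumptions, not part of iQ4AI.

```python
from typing import Mapping, Optional

# Hypothetical quality gate: (comparison, limit) per metric.
THRESHOLDS: dict[str, tuple[str, float]] = {
    "f1": (">=", 0.80),
    "disparate_impact": (">=", 0.80),
    "psi": ("<=", 0.25),
}


def failed_checks(
    metrics: Mapping[str, float],
    thresholds: Mapping[str, tuple[str, float]] = THRESHOLDS,
) -> list[str]:
    """Return one message per metric that is missing or outside its limit."""
    failures = []
    for name, (op, limit) in thresholds.items():
        value: Optional[float] = metrics.get(name)
        if value is None:
            failures.append(f"{name} is missing")
            continue
        if op == ">=":
            ok = value >= limit
        elif op == "<=":
            ok = value <= limit
        else:
            raise ValueError(f"unknown comparison {op!r} for {name}")
        if not ok:
            failures.append(f"{name}={value} violates {op} {limit}")
    return failures


def gate_exit_code(metrics: Mapping[str, float]) -> int:
    """0 lets the pipeline continue; 1 fails the step."""
    failures = failed_checks(metrics)
    for message in failures:
        print("FAIL:", message)
    return 1 if failures else 0
```

In a pipeline script this would typically end with `sys.exit(gate_exit_code(metrics))`, where `metrics` comes from the preceding evaluation step.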

iQ4AI comes with service inspections that help your team get the most out of the platform, including dedicated support and training to maximize its effectiveness.

We also offer customized services through our Trustworthy AI consultancy, providing solutions tailored to your unique business needs and helping ensure trustworthy, reliable AI operations before deployment.

Benefits

Tailor your AI systems to meet your specific business challenges, while mitigating risks and ensuring compliance with industry and regulatory standards.

Automated Efficiency

Streamline repetitive testing tasks, saving time and improving team alignment for more efficient workflows.

Continuous Improvement

Support continuous testing and optimization practices, increasing resource efficiency, boosting release throughput, and driving talent satisfaction.

Centralized Control

Manage AI testing strategies with a unified platform for test data selection, model artifacts, metric analysis, and actionable insights based on industry-proven methodologies.

Consistency & Reliability

Ensure uniform testing processes over time, reducing administrative overhead, improving reproducibility, and producing more reliable results.

Compliance & Risk Alignment

Complement compliance efforts with risk-based approaches that naturally integrate business objectives with research and innovation.

Bundles

Basic

AI Testing and Auditing Tool:

Access to audit up to 5 AI models or systems.

Min. Duration:

12 months

Inspections:

10 inspections

Suitable for organizations needing periodic reviews of a few AI systems with limited complexity.

Extended

AI Testing and Auditing Tool:

Access to audit up to 15 AI models or systems.

Min. Duration:

12 months

Inspections:

20 inspections

Ideal for organizations needing regular reviews of AI systems with moderate complexity.

Advanced

AI Testing and Auditing Tool:

Access to audit an unlimited number of AI models or systems.

Min. Duration:

12 months

Inspections:

30 inspections

+ 5 advanced inspections

Designed for large enterprises with extensive AI deployment.

Each inspection includes:

- Foundation analysis (Performance, Fairness): Automated evaluation of the model or system.

- Results interpretation: Reporting via the platform (no additional deliverables).

- Client interaction: Up to 2 time-boxed sessions.

Each advanced inspection includes:

- Advanced analyses (Performance, Fairness, Explainability Analysis), results interpretation, and reporting via the platform.

- Client interaction: Up to 4 time-boxed sessions.

FURTHER READING

The Quality Imperative: Why Leading Organizations Proactively Evaluate Software and AI Systems with Yiannis Kanellopoulos, hosted by George Anadiotis

In an increasingly AI-driven world, quality is no longer just a technical metric—it's a strategic imperative. In this episode of...

ISO 42001 Advisory Form: Evaluate Your Readiness for Responsible AI Governance

As AI systems become more central to business operations, the need for formalized, accountable, and ethical AI practices has never...

code4thought at Two Major International AI Conferences This April

We are proud to announce that our CEO, Yiannis Kanellopoulos, will be representing code4thought at two prominent international events this...
