monthly insider

Generative AI Quality Testing:

A Standards-Based Approach for Reliability

19/02/2025

19 MIN READ /

Generative AI (GenAI) is revolutionizing industries ranging from content generation and software engineering to healthcare and finance. Unlike traditional AI systems, GenAI models generate dynamic outputs, introducing significant challenges in testing and validation.

Integrating retrieval-augmented generation (RAG) and autonomous AI agents within GenAI models further amplifies complexity, necessitating well-defined, structured evaluation frameworks. Rigorous testing methodologies are critical to ensuring reliability, security, and compliance.

AI Testing: A Regulatory and Technical Perspective

AI testing is foundational to ensuring compliance, robustness, and fairness in GenAI-driven applications. Standards such as ISO 42001 mandate structured testing, human oversight, and risk mitigation strategies for responsible AI deployment. Organizations must implement fairness principles throughout the AI lifecycle—from data acquisition and preprocessing to model training and post-deployment validation.

Additionally, ISO 5538 emphasizes extending traditional software lifecycle processes to accommodate AI-specific characteristics, particularly data-intensive testing and real-time performance monitoring. Concurrently, the EU AI Act enforces stringent compliance requirements for high-risk AI systems, mandating risk management frameworks, dataset validation, and transparency measures.

These regulatory directives reinforce the necessity of AI testing frameworks that incorporate trustworthy considerations, documentation, and proactive risk mitigation strategies.

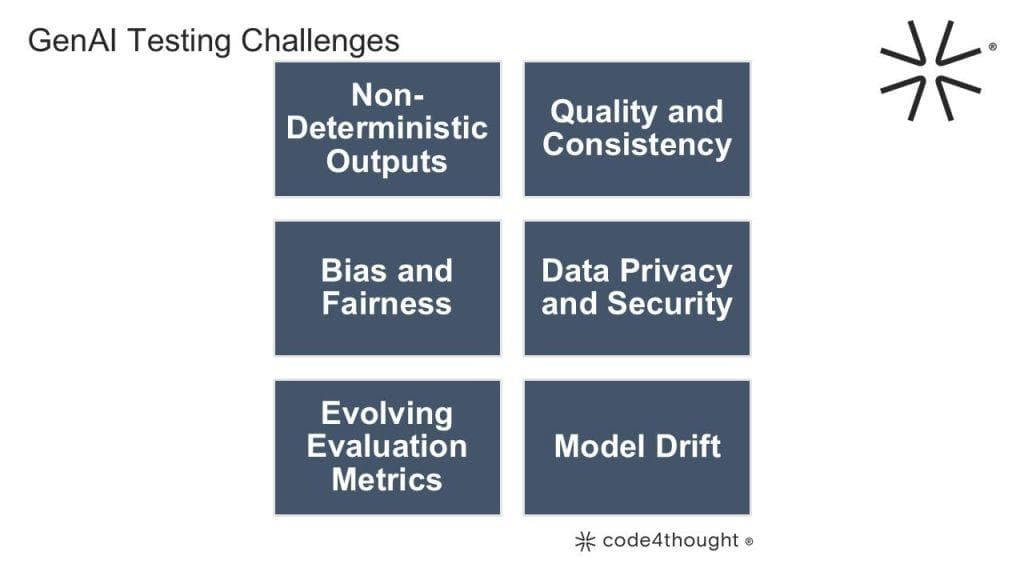

Challenges in Testing Generative AI Applications

Testing Generative AI (GenAI) applications differs significantly from traditional AI systems due to their dynamic nature and the complexity of evaluating outputs across a broad range of potential responses. Here are some key challenges:

A single, universally accepted “true” response is not available in most cases

By design GenAI models are expected to be able to produce diverse responses to the exact same input at any specific point in time. This variability complicates testing, as it requires assessing multiple plausible outputs rather than a single expected result.

Quality and Consistency

Ensuring that GenAI-generated content is accurate, contextually relevant, and free from factual errors is critical. The challenge lies in defining and measuring what constitutes “acceptable” quality, especially when subjective elements such as creativity and tone are involved.

Bias and Fairness

GenAI models inherit biases present in their training data, potentially leading to biased, unfair, or inappropriate outputs. Testing must include robust fairness assessments and mitigation strategies to prevent the reinforcement of harmful biases.

Data Privacy and Security

Security is a major concern, as GenAI models can inadvertently expose sensitive or proprietary information. Testing protocols must ensure that models do not memorize and bring up confidential data and are resistant to data poisoning attacks.

Evolving Evaluation Metrics

Traditional AI evaluation metrics, such as accuracy and F1 scores, may not suffice for GenAI. Organizations are developing new benchmarks to assess attributes like reasoning, adaptability, and creativity. For example, real-world problem-solving tests are increasingly used to evaluate AI performance in practical applications.

Model Drift

Over time, GenAI models may experience “drift,” similarly to any ML model, where their outputs deviate from expected behavior due to new training data or changing usage patterns. However, identifying the drift and its root causes accurately is much more difficult compared to the ML paradigms. Continuous monitoring and fine-tuning are essential to maintain reliability and performance.

In contrast to traditional AI systems, which often focus on precision and performance within well-defined parameters, GenAI testing always demands a more flexible and dynamic approach.

Intricacies of GenAI Testing – The Deterministic Software Perspective

GenAI testing in software engineering presents unique challenges due to its dynamic nature and the need for robust infrastructure. Key aspects include:

Balancing Trial & Error with Scenario-Based Testing

Unlike deterministic software testing, GenAI testing requires a mix of volume-based experimentation and structured scenario testing to account for unexpected behaviors and edge cases.

Hardware and Computational Constraints

Large-scale GenAI models demand significant computing power, making testing resource-intensive. Efficient use of computing resources, model compression techniques, and distributed testing strategies are critical.

User Diversity in Design and Tuning

User behavior significantly impacts GenAI performance. Testing must account for diverse user demographics, language variations, and domain-specific nuances to optimize real-world applicability.

Testing in Production

Due to the unpredictability of user inputs and evolving use cases, real-world testing is indispensable. Continuous monitoring, A/B testing, and adaptive learning mechanisms help refine performance over time.

Acceptable Behavior Thresholds

Unlike traditional software testing, where pass/fail criteria are binary, GenAI testing often requires defining acceptable behavior ranges. Some error tolerance is necessary to allow for greater scenario coverage and flexibility in response generation.

Intricacies of GenAI Testing – The ML/DL Perspective

From a machine learning (ML) and deep learning (DL) standpoint, GenAI testing involves significant architectural and methodological considerations:

Sensitivity to Architecture Choices

The performance and learning capacity of large language models (LLMs) depend heavily on their architecture. Factors such as tokenization strategy, layer depth, and model fine-tuning parameters significantly impact output quality.

Complex Input and Output Dependencies

Testing GenAI models requires evaluating multiple interdependent components, including embedding models, indexing strategies, chunking mechanisms, filtering systems, and security guardrails. Each of these elements influences overall model behavior.

Dynamic Training Datasets

Unlike traditional ML models trained on predefined datasets, GenAI models often require multiple dataset types for pre-training, fine-tuning, and reinforcement learning. Testing must verify dataset integrity and balance across different data sources.

LLMs Store Probabilities, Not Facts

Since LLMs generate text based on probability distributions rather than factual knowledge, their outputs may appear confident but be factually incorrect (hallucinations). Fact-checking mechanisms and retrieval-augmented generation (RAG) techniques are essential for improving accuracy.

Misalignment Between Evaluation Metrics and Business Goals

Standard ML performance metrics may not always align with business or user expectations. Metrics such as BLEU and ROUGE scores may provide incomplete assessments, necessitating alternative evaluation frameworks incorporating human judgment and real-world effectiveness.

Reconciling Human Feedback with Automated Metrics

Automated pass/fail assessments may diverge from human evaluations, especially in subjective domains such as creative writing, code generation, or summarization. Hybrid evaluation approaches—combining human-in-the-loop feedback with quantitative benchmarks (e.g. generated source code can be evaluated using industry accepted code quality metrics such as cyclomatic complexity alongside with peer reviews)— help bridge this gap.

Evaluating Generative AI Applications: A Structured Methodology

Ensuring the reliability, accuracy, and ethical integrity of Generative AI (GenAI) applications requires a structured approach to evaluation. Given the non-deterministic nature of these systems and their reliance on vast external datasets, a robust methodology is essential to maintaining performance, fairness, and security.

Key Evaluation Pillars

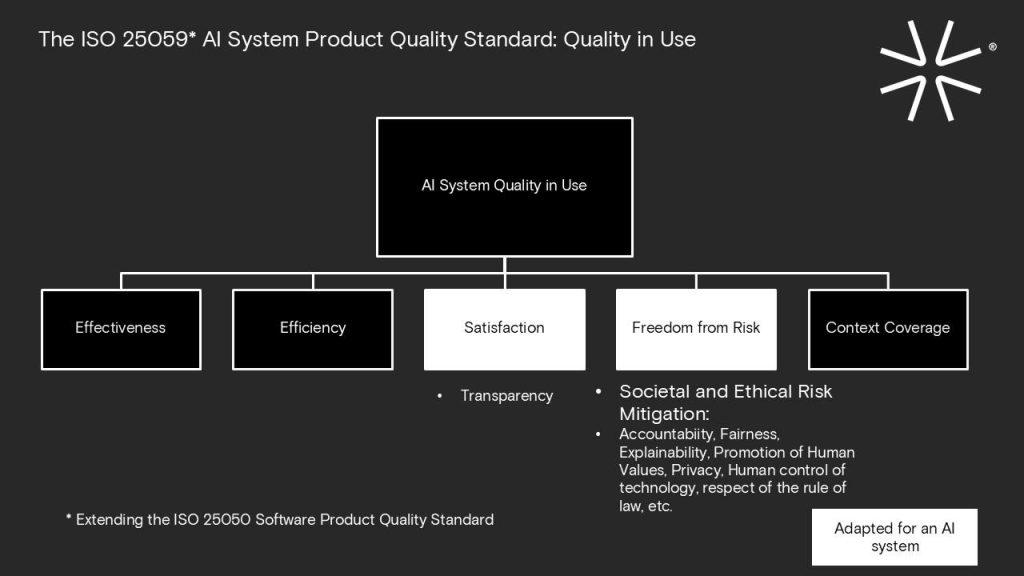

Evaluation must be based on ISO 25059 criteria for system quality, adapted to the specificities of GenAI systems.

More specifically, the following factors should be the foundation for evaluating GenAI applications.

Data Quality Testing: The effectiveness of a GenAI system heavily depends on the quality of its training and external data sources. Poor data quality can propagate errors, biases, and inconsistencies into generated outputs. Testing must verify:

- Accuracy: Ensuring factual correctness in retrieved or generated content.

- Consistency: Checking for stable performance across different queries and use cases.

- Relevance: Ensuring that data aligns with the intended context and domain-specific requirements.

Performance Evaluation: The integration of retrieval-augmented generation (RAG) and external databases can significantly impact a system’s responsiveness and resource utilization. To maintain efficiency, testing should include:

- Load Testing: Evaluating how the system performs under peak loads to prevent latency issues.

- Scalability Analysis: Assessing whether the model can handle increased query volumes without degradation.

- Computational Cost Optimization: Ensuring optimal use of hardware resources to balance performance and cost.

Bias and Fairness Assessment: Bias in GenAI models can stem from training data, retrieval mechanisms, or fine-tuning processes. To mitigate bias and ensure ethical AI outputs:

- Bias Detection: Using fairness metrics and diverse test cases to identify unintended biases.

- Mitigation Strategies: Applying bias-correction techniques such as re-weighting, adversarial debiasing, or reinforcement learning from human feedback (RLHF).

- Diversity Testing: Ensuring that responses remain inclusive across different demographic, cultural, and linguistic inputs.

Continuous Monitoring & Regression Testing: As external data sources evolve, a GenAI system’s performance and output consistency can drift over time. To prevent unintended degradation:

- Automated Regression Testing: Detecting inconsistencies introduced by model updates or dataset changes.

- Behavioural Drift Analysis: Monitoring shifts in model behaviour due to new user interactions or external data updates.

- Feedback Loops: Incorporating real-world user feedback to iteratively refine model performance.

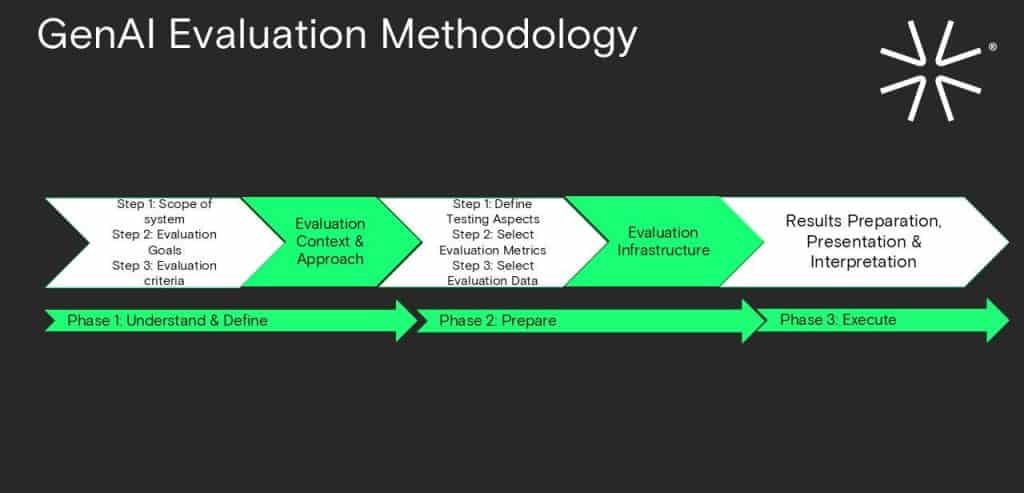

Elements of a Robust Testing Methodology

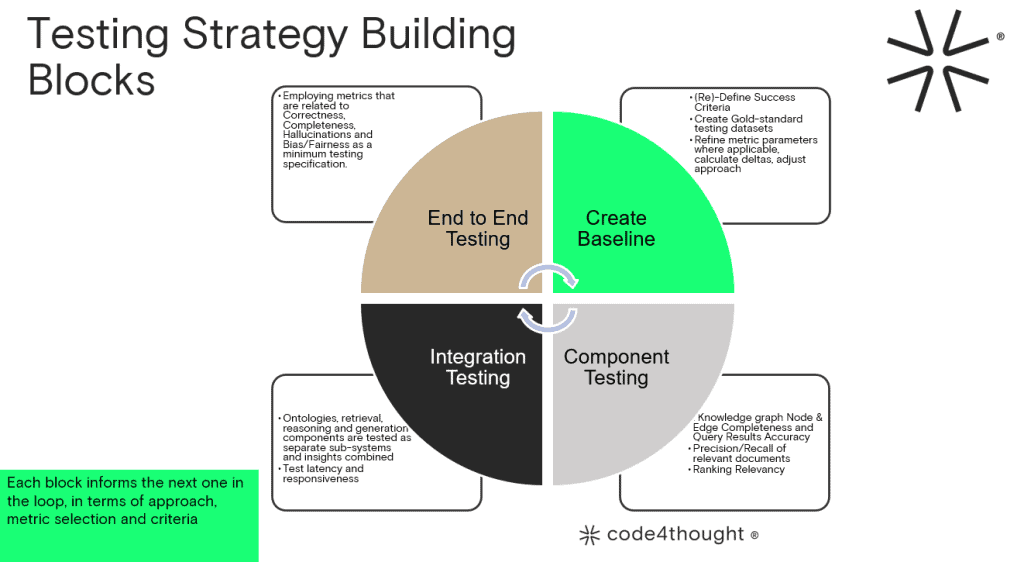

Testing GenAI applications requires a hybrid approach that combines automation, human oversight and continuous monitoring. code4thought’s methodology follows a phased approach, as shown in the diagram below.

Our methodology is based on key strategies that enhance the reliability of GenAI systems:

1. Automated Testing Frameworks

Automated tools streamline the testing lifecycle, enabling scalable and repeatable validation processes. Key frameworks include:

- TensorFlow Extended (TFX) for end-to-end ML pipeline validation, including data validation, model analysis, and deployment monitoring.

- MLflow for experiment tracking, model versioning, and performance benchmarking across different iterations.

- Great Expectations for automated data quality testing and anomaly detection.

2. Human-in-the-Loop (HITL) Testing

While automation enhances efficiency, human evaluators play a critical role in assessing nuanced aspects of GenAI outputs:

- Contextual Relevance Assessment: Ensuring responses align with domain-specific requirements and user expectations.

- Ethical & Compliance Audits: Evaluating AI-generated content against regulatory and ethical standards.

- Edge Case Identification: Detecting failures in complex or ambiguous scenarios that automated tests may overlook.

3. Continuous Monitoring & Adaptive Feedback Loops

Real-world performance assessment is critical for maintaining long-term reliability. A continuous feedback loop ensures the model evolves with emerging data and use cases. This involves:

- Real-Time Performance Metrics: Tracking response accuracy, latency, and system reliability under dynamic conditions.

- User Interaction Insights: Capturing user feedback and engagement metrics to refine model behaviour.

- Automated Drift Detection: Identifying significant deviations in model performance over time and implementing corrective measures.

The following diagram provides an overview of how these strategies integrate into code4thought’s comprehensive approach to GenAI testing.

Additional Security Considerations

Incorporating red teaming and adversarial testing alongside the described testing framework is a best practice for enhancing security, ensuring AI systems are robust against potential vulnerabilities and threats. Security and risk assessments are essential to prevent exploitation or misuse of GenAI systems. Red teaming strategies involve:

- Simulating Cyber Attacks: Identifying vulnerabilities such as prompt injection attacks, data leakage, and adversarial exploits.

- Toxic Content Testing: Stress-testing the model against harmful or unethical prompt injections to prevent biased or misleading outputs.

- Guardrail Validation: Verifying that filters and safety mechanisms function effectively without over-restricting valid responses.

Adapting Testing Strategies to System Architecture

The effectiveness of Generative AI (GenAI) testing is closely tied to the underlying system architecture. Different architectures require tailored testing methodologies to address their unique challenges. For example, the adoption of Retrieval Augmented Generation (RAG) systems and AI agents creates both new opportunities but also introduce distinct testing challenges.

RAG-based GenAI models rely on external knowledge sources to enhance their responses, making their evaluation more complex than traditional AI models. Testing must address both functionality and security. Functional testing ensures the system accurately retrieves, integrates, and synthesizes external data without introducing inconsistencies or misinterpretations. Key considerations for testing RAG models include:

- Assessing how well the retrieval mechanism selects the most relevant data for a given input,

- Ensuring that the system maintains coherence when combining retrieved information with generated content, and

- Evaluating the speed and efficiency of retrieval operations, particularly under high-load conditions.

On the other hand, adversarial testing identifies vulnerabilities in the retrieval process that could be exploited through manipulative inputs. This includes how the system reacts to prompt injection attacks and data poisoning risks. It is also equally essential to include fact-checking mechanisms to prevent the model from amplifying misinformation.

In the case of AI agents, hailed by some as the AI trend of 2025, they offer new opportunities for enhancing GenAI testing but also introduce distinct challenges. Two key areas of impact include enhanced test automation and continuous testing.

AI agents can dynamically generate test cases by analysing the system’s behaviour, creating diverse scenarios, including edge cases that might not be captured through manual testing. In addition, AI-driven testing frameworks can leverage machine learning techniques to identify evolving patterns in system behaviour and adapt testing strategies accordingly. This ensures that the GenAI model remains resilient to changes in user interactions and external data updates.

However, AI agents also present challenges that must be accounted for:

- Despite their automation capabilities, AI agents are only as reliable as the data they are trained on. Without rigorous oversight and validation mechanisms, there is a risk of propagating errors or reinforcing biases in testing outcomes.

- AI agents require substantial computational resources, and their autonomous nature introduces potential security risks. For instance, collusion risks could emerge if multiple AI agents interact in unintended ways, making it difficult to assign accountability for unexpected failures or harmful outputs.

A Business-Oriented Approach

The adoption of GenAI presents unparalleled opportunities alongside novel risks. As businesses integrate these systems, robust evaluation frameworks become imperative for ensuring reliability, trust, and compliance.

code4thought’s structured testing methodology offers a comprehensive framework for GenAI validation, that includes the strategies discussed in this blog.

For organizations seeking expert-driven GenAI evaluation solutions, code4thought provides tailored testing methodologies to align GenAI implementations with business and regulatory requirements. Contact us today to explore how our expertise can help you test and optimize your GenAI systems for enhanced performance, security, and compliance.